Vertexing Algorithms with the ATLAS Experiment

Science Undergraduate Laboratory Internship (SULI) program, Lawrence Berkeley National Laboratory (August 2017–December 2017).

Mentor: Maurice Garcia-Sciveres, LBNL Physics Division and ATLAS Collaboration.

Associate mentors: Simone Pagan Griso and Ben Nachman, ATLAS Collaboration.

Introduction

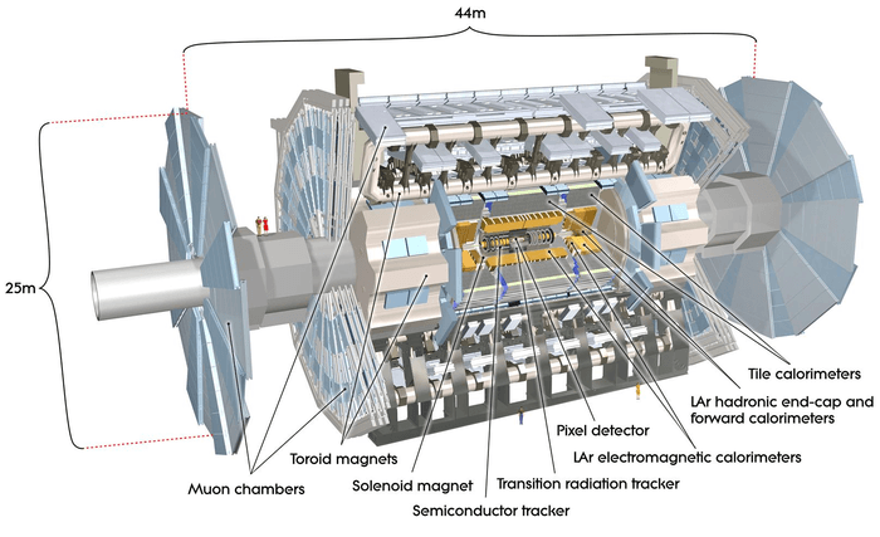

In particle accelerators like the Large Hadron Collider (LHC), physicists collide protons at very high energies. These collisions produce showers of high-energy particles, which leave tracks as they deposit energy while passing through detectors like those in the ATLAS Experiment, one of the two general-purpose experiments at the LHC. These tracks can be used to determine where in space the protons collided and whether any special particles were produced in the collisions.

At the ATLAS detector, the LHC collides bunches of protons every 25 nanoseconds, and each "bunch crossing" currently results in roughly 20–40 proton-proton collisions. All but one of these collisions are a source of noise and are known as pile-up. Therefore, a central challenge for ATLAS is the accurate reconstruction of vertices, the spatial locations of energetic proton-proton collisions. The process of reconstructing individual collisions and associating outgoing particles to them is known as vertexing.
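To put these numbers in perspective, the short Python sketch below works out the bunch crossing rate implied by a 25 nanosecond spacing and the fraction of collisions in a crossing that count as pile-up. It assumes every 25 ns slot contains colliding bunches, which is an idealization, and the function names are made up for illustration.

```python
# Back-of-the-envelope arithmetic for the figures quoted above.
# Assumption: every 25 ns slot holds colliding bunches (an idealization).

BUNCH_SPACING_NS = 25.0


def crossing_rate_hz(spacing_ns: float) -> float:
    """Bunch crossings per second for a given spacing in nanoseconds."""
    return 1e9 / spacing_ns


def pileup_fraction(collisions_per_crossing: int) -> float:
    """Fraction of collisions in one crossing that are pile-up,
    assuming exactly one collision is the one of interest."""
    return (collisions_per_crossing - 1) / collisions_per_crossing


rate = crossing_rate_hz(BUNCH_SPACING_NS)
print(f"crossing rate: {rate / 1e6:.0f} MHz")

for n_collisions in (20, 40):
    per_second = rate * n_collisions
    fraction = pileup_fraction(n_collisions)
    print(
        f"{n_collisions} collisions per crossing: "
        f"{per_second:.1e} collisions per second, "
        f"{fraction:.1%} of them pile-up"
    )
```

Even at 20 collisions per crossing, 95% of the collisions are pile-up, which is why separating the one interesting vertex from its neighbors matters so much.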

Motivation

In the coming years, the LHC will be upgraded to significantly improve its data collection rate. Specifically, the HL-LHC (High-Luminosity LHC) upgrade will increase the number of proton-proton collisions per bunch crossing from around 40 to over 200. This will increase the likelihood of observing a rare event but will also increase the relative proportion of pile-up, so that events of interest become harder to distinguish from the background. Improved vertex reconstruction algorithms will therefore be needed to keep up with the increased luminosity and to continue processing the massive amounts of data produced by the collider.

Tasks and Skills

Over the course of this internship, I ran some standard reconstruction algorithms on simulated collision data and then compared the reconstructions to the simulation input. Based on the algorithm output, I developed code to analyze the quality of reconstructed vertices using ROOT, a data analysis framework developed by CERN. With ROOT, I studied how consistently particle tracks could be associated to the correct vertices and in particular how well the vertex of primary interest, known as the hard scatter vertex, could be reconstructed.
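As a rough illustration of what "associating tracks to the correct vertices" can mean in practice, here is a minimal Python sketch of two simple quality measures for a reconstructed hard scatter vertex. This is not the ROOT analysis code from the internship, and the `Track` fields, function names, and metric definitions are hypothetical choices made for this example. It assumes each simulated track records both the true collision that produced it and the reconstructed vertex it was assigned to.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Track:
    # Hypothetical fields, for illustration only.
    truth_vertex: int            # simulated collision that produced the track
    reco_vertex: Optional[int]   # reconstructed vertex it was assigned to, if any


def association_purity(tracks: Sequence[Track], reco_id: int, truth_id: int) -> float:
    """Fraction of tracks assigned to reco vertex `reco_id` that truly
    come from simulated collision `truth_id`."""
    assigned = [t for t in tracks if t.reco_vertex == reco_id]
    if not assigned:
        return 0.0
    return sum(t.truth_vertex == truth_id for t in assigned) / len(assigned)


def association_efficiency(tracks: Sequence[Track], reco_id: int, truth_id: int) -> float:
    """Fraction of tracks from simulated collision `truth_id` that were
    assigned to reco vertex `reco_id`."""
    produced = [t for t in tracks if t.truth_vertex == truth_id]
    if not produced:
        return 0.0
    return sum(t.reco_vertex == reco_id for t in produced) / len(produced)


# Toy event: collision 0 is the hard scatter, collisions 1 and 2 are pile-up.
tracks = [
    Track(0, 0), Track(0, 0), Track(0, 0),  # hard scatter tracks, correctly assigned
    Track(0, 1),                            # hard scatter track lost to another vertex
    Track(1, 0), Track(2, 0),               # pile-up tracks contaminating vertex 0
    Track(1, 1), Track(2, None),            # pile-up tracks elsewhere or unassigned
]

purity = association_purity(tracks, reco_id=0, truth_id=0)
efficiency = association_efficiency(tracks, reco_id=0, truth_id=0)
print(f"purity = {purity:.2f}, efficiency = {efficiency:.2f}")
```

In this toy event, pile-up tracks pulled into the hard scatter vertex lower the purity, while hard scatter tracks assigned elsewhere lower the efficiency, so the two numbers capture different failure modes.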

I documented my results in an internal research diary and sent regular updates to my mentors via email and Mattermost (a CERN-internal messaging system). I presented progress updates on my research at weekly group meetings at Berkeley Lab and gave short (15–20 minute) presentations to the ATLAS vertexing group via teleconference. I also prepared a research poster for the fall intern poster session run by Workforce Development & Education at Berkeley Lab.

In April 2018, I traveled to Columbus, Ohio to give a presentation on my findings at the APS April Meeting 2018, sponsored by the Division of Particles and Fields. In this presentation, I discussed the differences between two algorithms commonly used for vertexing and argued that the newer imaging-based algorithm improved the spatial resolution of vertices, but still needed more optimization in terms of track association.

Results and Broader Impacts

In this work, we confirmed that overall hard scatter reconstruction efficiency remains extremely high even at the increased pile-up levels expected at the HL-LHC. Moreover, the hard scatter position can often be resolved to high precision, on the order of tens of microns. However, the quality of the hard scatter vertex in terms of correct track association is significantly impacted by the number of collisions per bunch crossing, so that track association suffers when the collision density is very high.

High-quality vertexing is important because it helps us to recognize when rare particles are produced in the detector and understand how they break down into other common particles. Accurate track-vertex association allows us to be more precise about experimental deviations from the theoretical predictions of the Standard Model of particle physics.

Documentation

- My final research writeup, Vertexing Algorithms with the ATLAS Detector for the HL-LHC Upgrade

- My research poster of the same name

- SULI Participant Vignette

- The abstract for my APS April Meeting presentation from Session S08: Detector R&D and Performance I

- My presentation from the APS April Meeting 2018